Opening Thoughts

With the growing complexity and automation of cyber threats, relying solely on traditional red teaming approaches is no longer enough. AI-driven red teaming is emerging as a transformative shift, offering organizations the ability to test their defenses with more realism, depth, and frequency. In this blog, we explore how enterprises can leverage this cutting-edge strategy to stay one step ahead of adversaries.

Breaking Down the Concept

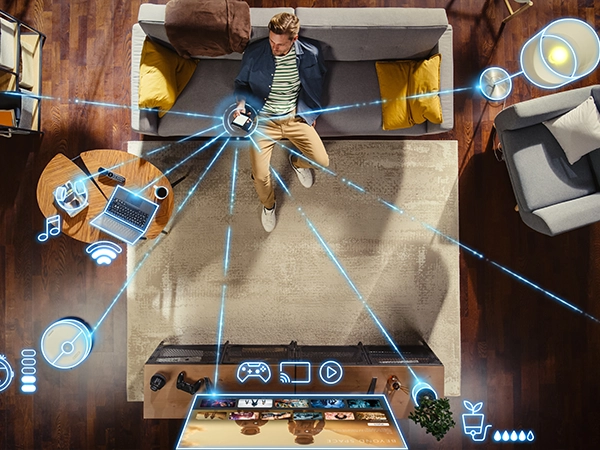

AI-powered red teaming involves deploying autonomous systems to mimic cyberattacks. These agents, powered by advanced machine learning and agentic AI, are capable of:

- Probing systems persistently without fatigue.

- Learning from responses and modifying strategies in real time.

- Navigating through networks, cloud services, and IoT devices efficiently.

This represents a leap forward from periodic manual simulations, introducing smarter and continuous attack emulation.
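To make the "learn from responses and modify strategy" idea concrete, here is a minimal sketch of an agent loop that prefers whichever technique has worked best so far. Everything in it is an assumption made for illustration: the technique names, the `simulated_target` stand-in, and the simple success-rate scoring are hypothetical and not taken from any specific red teaming product.

```python
from collections import defaultdict


def simulated_target(technique: str) -> bool:
    """Hypothetical lab target: only one technique succeeds against it."""
    return technique == "weak_credentials"


def run_adaptive_probe(techniques, attempt, rounds=10):
    """Each round, pick the technique with the best observed success rate.

    Untried techniques get an optimistic score of 1.0, so they are preferred
    over techniques that have already failed. Ties go to the earliest entry.
    """
    tries = defaultdict(int)
    wins = defaultdict(int)
    history = []

    def score(technique):
        if tries[technique] == 0:
            return 1.0
        return wins[technique] / tries[technique]

    for _ in range(rounds):
        choice = max(techniques, key=score)
        success = attempt(choice)
        tries[choice] += 1
        wins[choice] += int(success)
        history.append((choice, success))
    return history


# Hypothetical technique labels, used only for this sketch.
TECHNIQUES = ["port_scan", "weak_credentials", "phishing_sim"]
history = run_adaptive_probe(TECHNIQUES, simulated_target, rounds=6)
for step, (technique, success) in enumerate(history, start=1):
    print(step, technique, "success" if success else "failed")
```

After one failed attempt the loop moves on from `port_scan` and then keeps returning to the technique that works. A real agent would use a far richer feedback signal, but the shape of the loop (act, observe, update, choose again) is the same.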

Why Now? What Makes It Essential?

- Nonstop Threat Pressure: Modern systems face around-the-clock risks. AI enables constant simulations to match that pace.

- Smarter Adversaries Require Smarter Defenses: Attackers are leveraging automation, and defenders should too.

- Enhanced Visibility: AI can illuminate multi-layered attack paths that might otherwise go undetected.

- Resource Optimization: Fewer human resources are needed to run comprehensive tests at scale.

How Enterprises Can Get Started

Instead of diving straight into tools, organizations should begin by agreeing on goals and securing internal buy-in. Here’s a phased approach:

- Step 1: Build a Cyber-Aware Culture

Encourage awareness at all levels, technical and non-technical. From SOC teams to executive leadership, everyone should understand the role and scope of autonomous threat simulations.

- Step 2: Define Clear Boundaries and Use Cases

Set the parameters for your AI agents. Determine which systems can be tested, when, and under what constraints. Establish oversight and rollback protocols.

- Step 3: Explore the Right Technology Stack

Whether leveraging platforms like MITRE CALDERA or commercial offerings such as SafeBreach and AttackIQ, ensure the tools integrate well with your existing ecosystem. For specialized needs, consider developing custom AI agents trained on your unique infrastructure.

- Step 4: Collaborate Across Teams

This isn’t just a red team initiative. Integrate feedback loops between red, blue, and purple teams to continually refine detection capabilities.

- Step 5: Operationalize and Measure

Use data from simulations to inform threat modeling, update security controls, and guide budgetary and staffing decisions. Capture insights in a structured format for executive and audit consumption.
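The boundaries described in Step 2 (which systems, when, and under what constraints) can be encoded as a check that every agent action must pass before it runs. The sketch below is one possible shape, not a standard: the `EngagementScope` class, its field names, and the example network range are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, time
from ipaddress import ip_address, ip_network


@dataclass(frozen=True)
class EngagementScope:
    """Hypothetical rules of engagement for an autonomous agent."""
    allowed_networks: tuple[str, ...]   # CIDR ranges approved for testing
    window_start: time                  # earliest time of day testing may run
    window_end: time                    # latest time of day testing may run
    kill_switch_engaged: bool = False   # oversight can halt all activity

    def permits(self, target_ip: str, when: datetime) -> bool:
        """Return True only if the target and the time are both in scope."""
        if self.kill_switch_engaged:
            return False
        # Assumes the window does not cross midnight.
        in_window = self.window_start <= when.time() <= self.window_end
        address = ip_address(target_ip)
        in_scope = any(address in ip_network(net) for net in self.allowed_networks)
        return in_window and in_scope


# Example: a test subnet that may be probed between 01:00 and 05:00.
scope = EngagementScope(
    allowed_networks=("10.20.0.0/24",),
    window_start=time(1, 0),
    window_end=time(5, 0),
)
print(scope.permits("10.20.0.15", datetime(2024, 1, 10, 2, 30)))  # in scope
print(scope.permits("10.30.0.15", datetime(2024, 1, 10, 2, 30)))  # wrong network
```

Keeping the check separate from the agent logic means the oversight team can review and change the scope without touching the agent itself, and the kill switch gives them a simple way to halt all activity.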

Safety & Governance

As enterprises adopt AI-driven red teaming, it’s crucial to embed safety and governance principles early. Establish clear accountability for simulation outcomes, ensure ethical boundaries are respected, and maintain transparency across teams. Governance frameworks should be adapted to include AI-specific risks, and simulation data must be securely stored and reviewed periodically to prevent misuse.

Real-World Applications and Lessons

Several industry leaders have already adopted AI-driven red teaming with significant success:

- Microsoft launched an AI Red Team that has performed over 80 internal assessments, targeting both cybersecurity flaws and ethical AI risks. Their tools like “Counterfit” and internal threat modeling frameworks have helped them preempt real-world threats before products reach customers.

Lesson Learned: Early detection of ethical and security flaws prevents downstream risks.

- Google, through its Secure AI Framework (SAIF), operationalized AI red teaming by simulating prompt injection and data misuse attacks across its AI-enabled services. One such test uncovered a vulnerability in its AI-enhanced search, prompting security reinforcements.

Lesson Learned: Proactive testing reveals vulnerabilities in AI-enhanced services.

- CrowdStrike recently rolled out a red team-as-a-service offering for GenAI products, helping clients proactively simulate model theft, poisoning attacks, and inference manipulation, focusing especially on enterprise LLM integrations.

Lesson Learned: Tailored simulations help secure enterprise LLM deployments.

- In the financial sector, a global services firm used HiddenLayer’s red teaming platform to test its AI fraud detection engines. The system revealed input manipulation tactics that could bypass fraud flags, allowing the firm to refine models and improve fraud response accuracy.

Lesson Learned: Input manipulation tests improve model robustness and accuracy.

Hypothetical Illustration: Smart Port Authority

Picture an AI red team agent deployed in a smart logistics hub. It identifies a vulnerable IoT controller managing cargo schedules. Without alerting defenses, it pivots to internal operations and simulates data exfiltration. Such nuanced scenarios help organizations anticipate how breaches might unfold and what gaps need immediate attention.
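One way to reason about a scenario like this is to model the environment as a graph of which assets can reach which, then search for the shortest route from the initial foothold to the data. The sketch below uses a breadth-first search over a made-up asset map; the asset names and connections are invented for this illustration and do not describe a real port system.

```python
from collections import deque

# Hypothetical reachability map: each asset lists what it can connect to.
ASSET_GRAPH = {
    "iot_cargo_controller": ["scheduling_server"],
    "scheduling_server": ["operations_db", "badge_system"],
    "badge_system": [],
    "operations_db": ["file_export_gateway"],
    "file_export_gateway": [],
}


def shortest_attack_path(graph, start, goal):
    """Breadth-first search; returns the shortest path as a list, or None."""
    queue = deque([[start]])
    visited = {start}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == goal:
            return path
        for neighbor in graph.get(node, []):
            if neighbor not in visited:
                visited.add(neighbor)
                queue.append(path + [neighbor])
    return None


path = shortest_attack_path(ASSET_GRAPH, "iot_cargo_controller", "file_export_gateway")
print(" -> ".join(path))
```

Each hop on the returned path is a place where segmentation or detection could have stopped the simulated breach, which makes the output a useful starting point for prioritizing fixes.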

Reinforcing the Defense Line

- Deploy behavioral anomaly detection to spot unexpected agent actions.

- Enrich your threat intelligence ecosystem with red team-derived insights.

- Update incident response runbooks based on findings from simulations.
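As a rough illustration of the first point, behavioral anomaly detection can start as simply as comparing current activity against a baseline. The sketch below flags hosts whose outbound connection count sits more than a chosen number of standard deviations from the baseline mean. The host names, counts, and the threshold of 3.0 are assumptions for the example; production systems typically use richer features and models.

```python
from statistics import mean, pstdev


def flag_anomalies(baseline, observed, threshold=3.0):
    """Return hosts whose count deviates from the baseline mean by more
    than `threshold` standard deviations (a simple z-score test)."""
    mu = mean(baseline)
    sigma = pstdev(baseline)
    flagged = []
    for host, count in observed.items():
        if sigma == 0:
            # A flat baseline: any deviation at all is treated as anomalous.
            is_anomalous = count != mu
        else:
            is_anomalous = abs(count - mu) / sigma > threshold
        if is_anomalous:
            flagged.append(host)
    return flagged


# Hypothetical hourly outbound connection counts from normal operation.
baseline_counts = [10, 12, 11, 9, 13, 10, 11, 12]
# Hypothetical counts for the current hour, keyed by host name.
current_counts = {"hmi-01": 12, "plc-07": 40, "hist-02": 9}

print(flag_anomalies(baseline_counts, current_counts))
```

Running red team simulations against a detector like this shows quickly whether the threshold is tight enough to catch an agent's activity without flooding analysts with false positives.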

Governance and Compliance Dimensions

While AI red teaming is innovative, it must be governed responsibly. Ensure alignment with existing standards (e.g., ISO 27001, NIST CSF), and document the outcomes of simulations for regulatory, legal, and audit reviews.

Final Reflection

AI in red teaming is not a distant vision; it’s an available, actionable upgrade. The ability to simulate dynamic, adaptive threats continuously gives enterprises a strategic advantage. It’s no longer just about whether you can defend against known attacks, but whether your organization can anticipate and adapt in the face of evolving adversarial tactics.

Action Checklist: 5 Strategic Next Steps for AI Red Teaming

- 1. Establish Governance Protocols

Define clear roles, responsibilities, and ethical boundaries for AI red teaming initiatives. Ensure oversight mechanisms are in place.

- 2. Align with Compliance and Standards

Map simulations to regulatory frameworks such as ISO 27001, NIST CSF, and internal audit requirements to ensure legal defensibility.

- 3. Integrate Insights into Threat Modeling

Use findings from AI-driven simulations to enhance threat models, update detection rules, and refine incident response playbooks.

- 4. Enable Cross-Functional Collaboration

Train security, engineering, and risk teams to interpret and act on red team outputs. Foster continuous feedback loops across functions.

- 5. Continuously Evolve AI Agents

Regularly update and retrain autonomous agents to reflect emerging threat vectors, adversarial tactics, and infrastructure changes.
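Checklist items 2 and 3 are easier to act on when findings are captured in a consistent, machine-readable shape. The sketch below tags each finding with one of the five core functions from NIST CSF 1.1 (CSF 2.0 adds a sixth, Govern) and rolls the findings up into a JSON report. The `Finding` fields, severity labels, and sample findings are hypothetical and meant only to show the structure.

```python
import json
from collections import Counter
from dataclasses import asdict, dataclass

# Core functions of NIST CSF 1.1; add "Govern" if you follow CSF 2.0.
CSF_FUNCTIONS = {"Identify", "Protect", "Detect", "Respond", "Recover"}


@dataclass
class Finding:
    """Hypothetical record for one red team observation."""
    title: str
    severity: str               # free-form label such as "low" or "high"
    csf_function: str           # which CSF function the gap falls under
    detected_by_blue_team: bool

    def __post_init__(self):
        if self.csf_function not in CSF_FUNCTIONS:
            raise ValueError(f"unknown CSF function: {self.csf_function}")


def build_report(findings):
    """Summarize findings for executive and audit consumption."""
    return {
        "total": len(findings),
        "by_csf_function": dict(Counter(f.csf_function for f in findings)),
        "undetected": [f.title for f in findings if not f.detected_by_blue_team],
        "findings": [asdict(f) for f in findings],
    }


# Invented sample findings, for illustration only.
findings = [
    Finding("IoT controller accepts default credentials", "high", "Protect", False),
    Finding("Lateral movement to scheduling server not alerted", "high", "Detect", False),
    Finding("Simulated exfiltration blocked at egress", "low", "Respond", True),
]
report = build_report(findings)
report_json = json.dumps(report, indent=2)
print(report_json)
```

The `undetected` list feeds directly into detection rule updates, while the per-function counts give auditors a quick view of where the gaps cluster.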